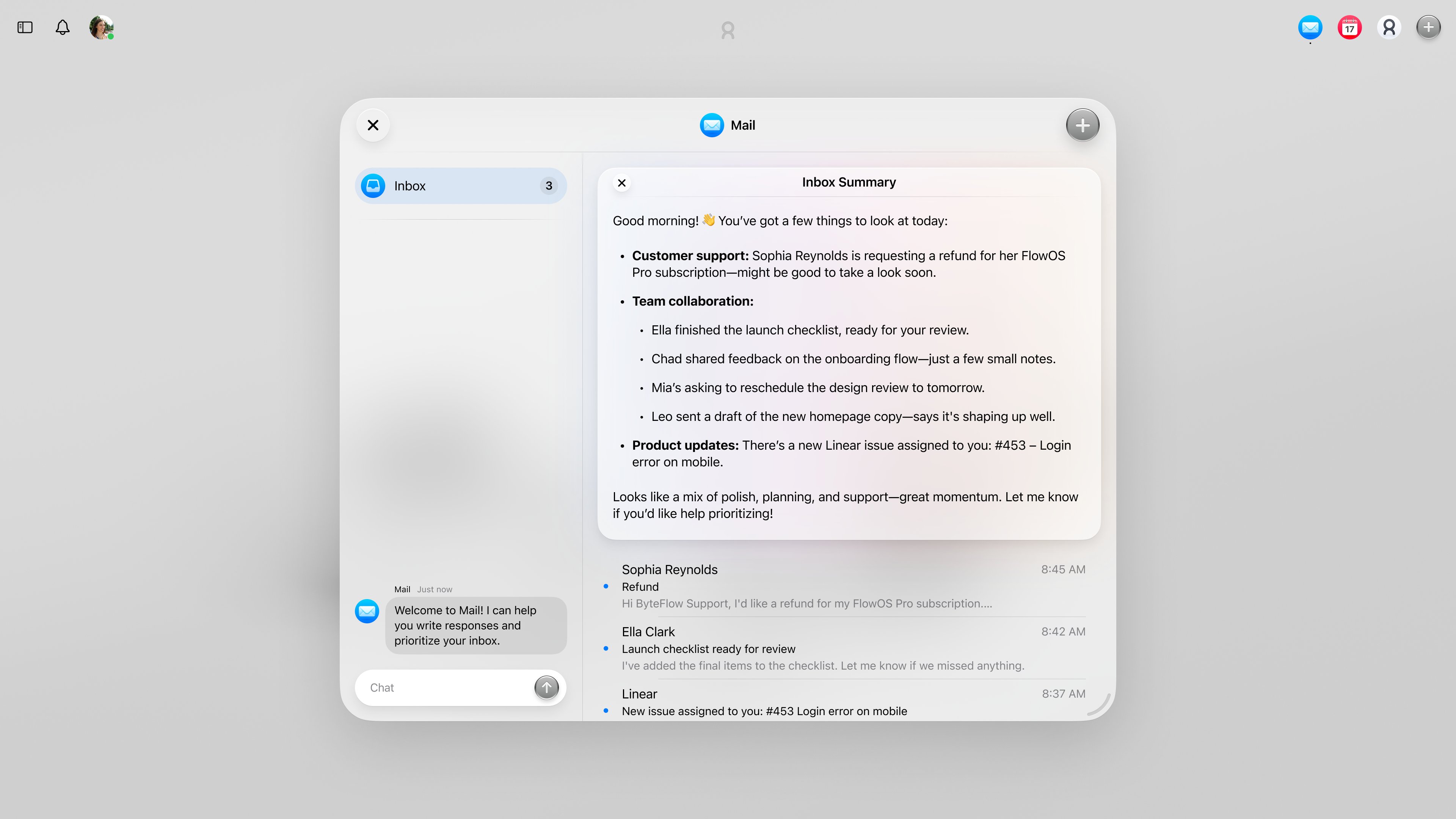

I developed Spaces, a desktop-like workspace interface that changes how users interact with Cobots in a collaborative environment. This feature transforms the traditional chatbot interface into an intuitive, multi-window desktop experience.

Building a window management system

When creating a desktop-like interface in the browser, we need to account for multiple overlapping windows with proper z-index layering and focus management.

For this project, I built a custom window management system using React hooks where each window tracks its own position, size, and focus state, and those states are saved to localStorage so the layout survives a reload. This meant that I could close my browser, come back later, and find my workspace exactly as I left it.
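The persistence side can be sketched roughly as follows. This is a simplified illustration rather than the project's actual code: the `WindowState` fields, the `spaces:layout` key, and the `KeyValueStore` interface are assumptions made for the example (`window.localStorage` satisfies that interface, which keeps the functions easy to test outside the browser).

```typescript
// Per-window state that gets persisted (field names are assumed).
interface WindowState {
  id: string;
  x: number;
  y: number;
  width: number;
  height: number;
}

// Minimal subset of the Web Storage API; window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const LAYOUT_KEY = "spaces:layout"; // hypothetical storage key

// Runtime check so only well-formed entries are restored.
function isWindowState(value: unknown): value is WindowState {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v["id"] === "string" &&
    [v["x"], v["y"], v["width"], v["height"]].every(
      (n) => typeof n === "number" && Number.isFinite(n)
    )
  );
}

function saveLayout(store: KeyValueStore, windows: WindowState[]): void {
  store.setItem(LAYOUT_KEY, JSON.stringify(windows));
}

function loadLayout(store: KeyValueStore): WindowState[] {
  const raw = store.getItem(LAYOUT_KEY);
  if (raw === null) return []; // nothing saved yet
  try {
    const parsed: unknown = JSON.parse(raw);
    return Array.isArray(parsed) ? parsed.filter(isWindowState) : [];
  } catch {
    return []; // unparseable data: start from an empty layout
  }
}
```

In a React app, `loadLayout(window.localStorage)` would typically seed the initial state and `saveLayout` would run from an effect whenever the layout changes.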

For performance, I used useRef caching and React.memo to avoid unnecessary re-renders, and animated only transforms and opacity to keep interactions at 60fps even with multiple windows moving around. I also implemented a most-recently-used ordering system so the last window you click always floats above the others. This fixed tricky layering issues where windows would get buried incorrectly, and it mimicked the behavior people expect from macOS.
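The most-recently-used ordering boils down to keeping a list of window IDs and moving the clicked one to the end. Here is a minimal sketch of that idea; the function names and the `BASE_Z_INDEX` value are illustrative, not taken from the project.

```typescript
const BASE_Z_INDEX = 100; // hypothetical starting layer for windows

// Move `id` to the end of the order so it becomes the top-most window.
// Returns a new array, which keeps React state updates immutable.
function bringToFront(order: readonly string[], id: string): string[] {
  return [...order.filter((other) => other !== id), id];
}

// Derive a window's z-index from its position in the MRU order.
// Unknown windows fall back to the base layer.
function zIndexFor(order: readonly string[], id: string): number {
  const index = order.indexOf(id);
  return index === -1 ? BASE_Z_INDEX : BASE_Z_INDEX + 1 + index;
}
```

Because z-index is derived from the list rather than stored per window, two windows can never end up claiming the same layer.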

Drag-and-drop implementation

Drag-and-drop was another area I wanted to feel really polished, similar to what you would find in a desktop environment.

I used react-draggable as a base but added my own constraints and event handling. For example, dragging only works from a defined .drag-handle region (aka the header), which prevents users from accidentally moving the window when they’re just trying to interact with its contents. When a drag starts, the system immediately brings that window into focus, so it never feels like you’re moving a “dead” layer in the background.
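As a rough illustration, the two behaviors map onto react-draggable's `handle` and `onStart` props. The helper below only builds those props so it can be shown without any JSX; `makeDragProps` and `focusWindow` are hypothetical names for this sketch.

```typescript
// Subset of react-draggable's <Draggable> props used in this sketch.
interface DragProps {
  handle: string; // CSS selector for the element that initiates a drag
  onStart: () => void; // called when a drag begins
}

function makeDragProps(
  windowId: string,
  focusWindow: (id: string) => void
): DragProps {
  return {
    // Only the header can start a drag, so clicks inside the content are safe.
    handle: ".drag-handle",
    // Focus the window as soon as the drag begins.
    onStart: () => focusWindow(windowId),
  };
}
```

The result would be spread onto the component, e.g. `<Draggable {...makeDragProps(id, focusWindow)}>`, with the window header carrying the `drag-handle` class.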

To prevent windows from vanishing off-screen, I calculated viewport bounds using getBoundingClientRect(). If a user tried to drag too far, the system gently constrained the window, and on resize, invalid positions were automatically corrected. I also added fallback handling for corrupted localStorage data, so the app would gracefully recover instead of breaking.
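The bounds logic is essentially a clamp. A minimal sketch, assuming the viewport size comes from something like `container.getBoundingClientRect()` and that positions are measured from the container's top-left corner (the type and function names are illustrative):

```typescript
interface Point {
  x: number;
  y: number;
}

interface Size {
  width: number;
  height: number;
}

// Keep a window fully inside the viewport. If the window is larger than
// the viewport on an axis, it is pinned to 0 on that axis.
function clampToViewport(position: Point, windowSize: Size, viewport: Size): Point {
  const maxX = Math.max(0, viewport.width - windowSize.width);
  const maxY = Math.max(0, viewport.height - windowSize.height);
  return {
    x: Math.min(Math.max(position.x, 0), maxX),
    y: Math.min(Math.max(position.y, 0), maxY),
  };
}
```

The same function covers both cases described above: call it during a drag to constrain movement, and again for every window when the viewport resizes to pull stranded windows back into view.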

Implementing dynamic agent integration

A big part of Cobot is the ability to have multiple agents working together. This is done through a registry of agents that can be used to create a workspace.

The architecture for this agent registry includes support for both UI-enabled agents and traditional chat-based interfaces (which would later become more generative).

I created a BaseWindow component that abstracts all the complex window behavior (focus, drag, resize, positioning) behind a reusable interface. From there, spinning up a new agent type (like Mail or Calendar) is as simple as extending BaseWindow and plugging in the agent’s specific UI. I followed a factory pattern to centralize window creation, which keeps the codebase maintainable. Each new agent type just needs to register its config, and the system knows how to render it. This made the architecture scalable and let me experiment quickly with different cobots, like a Google integration or a Notion MCP connection.
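To make the register-then-create flow concrete, here is a framework-free sketch of a registry with a factory method. Everything in it (`AgentRegistry`, `AgentConfig`, the `mode` field, the ID scheme) is an assumption for illustration; the real system renders React components rather than returning plain descriptors.

```typescript
type AgentMode = "ui" | "chat"; // UI-enabled agent vs. chat-based interface

interface AgentConfig {
  type: string;
  title: string;
  mode: AgentMode;
  defaultSize: { width: number; height: number };
}

interface WindowDescriptor {
  id: string;
  agentType: string;
  title: string;
  mode: AgentMode;
  width: number;
  height: number;
}

class AgentRegistry {
  private configs = new Map<string, AgentConfig>();
  private nextId = 1;

  // Each agent type registers its config once.
  register(config: AgentConfig): void {
    this.configs.set(config.type, config);
  }

  // Factory method: the single place where windows are created.
  createWindow(type: string): WindowDescriptor {
    const config = this.configs.get(type);
    if (!config) {
      throw new Error(`Unknown agent type: ${type}`);
    }
    return {
      id: `${type}-${this.nextId++}`,
      agentType: config.type,
      title: config.title,
      mode: config.mode,
      width: config.defaultSize.width,
      height: config.defaultSize.height,
    };
  }
}
```

Adding a new agent is then a single `register` call, for example `registry.register({ type: "calendar", title: "Calendar", mode: "ui", defaultSize: { width: 480, height: 360 } })`, with no changes to the window-creation code.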

In addition, each BaseWindow component checks the agent’s connection status before rendering its window, so authentication flows are handled gracefully.

Extra interactions & user experience

I also spent time on subtler interaction details. For instance, clicks on the background aren’t treated the same as clicks on the dock or inside a window. The system can distinguish between these contexts, so clicking empty space unfocuses windows, while dock clicks toggle the sidebar without breaking focus order. These small decisions create a more intuitive, desktop-like experience inside the browser.
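One way to model this is a small state transition function keyed on where the click landed. This is a sketch under assumed names (`ClickTarget`, `WorkspaceState`, `handleClick`), not the project's actual event handling:

```typescript
// Where a click landed; the window case carries the clicked window's id.
type ClickTarget =
  | { kind: "background" }
  | { kind: "dock" }
  | { kind: "window"; windowId: string };

interface WorkspaceState {
  focusedId: string | null;
  order: string[]; // most recently used window last
  sidebarOpen: boolean;
}

function handleClick(state: WorkspaceState, target: ClickTarget): WorkspaceState {
  switch (target.kind) {
    case "background":
      // Clicking empty space unfocuses, but keeps the stacking order.
      return { ...state, focusedId: null };
    case "dock":
      // Dock clicks toggle the sidebar and leave focus and order untouched.
      return { ...state, sidebarOpen: !state.sidebarOpen };
    case "window":
      // Window clicks focus the window and move it to the top of the order.
      return {
        ...state,
        focusedId: target.windowId,
        order: [
          ...state.order.filter((id) => id !== target.windowId),
          target.windowId,
        ],
      };
  }
}
```

Keeping the three cases in one function makes it easy to see that only window clicks ever change the focus order.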